What is AI (Artificial Intelligence)?

Artificial Intelligence

To understand AI (Artificial Intelligence), you first need to know what ‘artificial’ means. Birds fly naturally, but airplanes fly because humans built them. Airplanes imitate something natural, so they are artificial. This is one of the best examples of what ‘artificial’ means.

Intelligence is the ability to learn, understand, think, and act on what has been learned. It covers different human capabilities such as vision, understanding text and speech, and solving problems.

Machine Learning– To train a child to recognize a dog, we show them a bunch of dog images until they learn what a dog looks like. Similarly, we can teach a machine from examples. A few examples of applications that use machine learning models are chatbots, IoT devices such as smart speakers and smart homes, Google Maps and navigation, social media, e-commerce, self-driving cars, drones and so on.

What is Discriminative AI (Artificial Intelligence)?

Generally, we refer to the ability of human beings to classify things based on their characteristics as Discriminative Intelligence. Differentiating between a zebra and a horse and predicting the weather are examples of tasks for Discriminative AI.

What is Generative AI (Artificial Intelligence)?

The ability of human beings to create artwork and music, create new designs, and write stories or poems is known as Generative Intelligence.

We term the creation of art, text, music, or stories and poems using different AI art algorithms as Generative AI.

Popular Generative AI models

ChatGPT – It can create code, conversations and text content.

DALL-E 2 – It can create new images, complete existing images and so on.

Generative Pre-Trained Models

It is impractical for most people to build their own AI (Artificial Intelligence) models to solve complex problems, due to limited data and computing resources. This is where pre-trained models come into the picture.

Pre-trained models are machine learning models that are trained on very large datasets for a specific purpose and are made available to developers for use. GoogLeNet and AlexNet are a few examples of pre-trained models, and libraries such as OpenCV can load and run such models.

Note:- Generative AI models are also pre-trained models.

Special features of Generative AI models

These models (such as BLOOM, DALL-E 2, GPT-3) have the ability to create content from scratch, including audio, code, images, text, videos, etc.

They are trained on large datasets using substantial computational resources.

Models trained on text data, such as Meta’s Llama 3.1, BLOOM, GPT-3.5 and T5, are called LLMs (Large Language Models).

Some Generative AI models, such as Gemini or GPT-4o, are multimodal, that is, trained on different types of data such as images and text.

How to use LLMs (Large Language models)

You can prompt these models in the language you speak, and they respond in the same language. The ChatGPT application, which OpenAI launched in November 2022 on top of GPT-3.5, is one example.

Large Language Models are a kind of generative pre-trained model that demonstrates a variety of capabilities, including:

| Providing Answers to your Questions | Generating Fiction and Non-fiction content from Prompts | Completing text or a phrase | Powering human-like chatbots |

| Generating Computer Code | Translating text from one language to another | Performing calculations | Summarizing text |

| Classifying text into different categories | Analyzing text sentiment | Generating text that summarizes data in tables and spreadsheets | Responding to user input in a conversational manner |

Below are some popular Generative AI models.

| Text Generation | Image Generation | Voice Generation | Video Generation |

| GPT models | DALL-E | WaveNet | Make-A-Video |

| BLOOM | Stable Diffusion | DrumGAN | Sora |

| T5 | Dearmix | Voicebox by Meta | Suno AI |

| FALCON | Midjourney | Jukebox by OpenAI | |

| Gemini | | | |

Large Language Models (LLMs)

LLMs are neural networks that can have hundreds of billions of parameters. The quality of tasks such as language translation tends to improve as the model size increases. LLMs are trained on corpora of several hundred gigabytes of text data.

Popular Large Language Models (LLMs)

OpenAI GPT Models-

OpenAI has introduced multiple GPT models such as GPT-3.5, GPT-4, GPT-4o, and so on, which come with advanced text generation capabilities. They are also available through a conversational interface called ChatGPT.

Google Gemini- Google DeepMind introduced Google Gemini as a successor to previous Google AI models such as LaMDA and PaLM 2. Google Gemini is a multimodal model.

Mistral AI-

Mistral AI offers open-source LLMs that can be downloaded and customized for various uses. It offers a range of models, including Mistral 7B, Mixtral 8x7B and Mixtral 8x22B, with various capabilities.

Claude AI-

Anthropic released Claude 3 in March 2024. It is capable of analyzing images, converting text with a high level of accuracy, and generating creative text.

Popular LLM platforms

Many Generative AI models are made available via platforms for downloading and inferencing.

Cohere– Cohere LLMs are used for tasks like creating chatbots, summarizing text and generating creative content. It also provides a platform that allows developers and businesses to integrate these LLMs into their applications, with secure deployments and APIs for interacting with the models.

Hugging Face– Hugging Face is a leading platform that provides a vast repository of pre-trained models, datasets and tools, making it easy for developers and researchers to build and deploy NLP (Natural Language Processing) applications.

Uses of Large Language Models

Large Language Models (LLMs) have a vast array of applications. They excel in Natural Language Processing (NLP) tasks such as text generation, translation, summarization, and sentiment analysis. LLMs are also employed in content creation, generating various text formats from articles to scripts.

Here are a few capabilities, with examples demonstrated using the Gemini model–

Generate– LLMs can generate original text. The text generated can be conversations, information, suggestions, explanations and even code.

Classify– LLMs can be used for classification as well as clustering text based on similarities for topic modeling, semantic search, etc.

Summarize– LLMs help us summarize large volumes of text into few lines. You may use them to generate research paper abstracts, book and report summaries, chat or speech paraphrasing, etc.

Rewrite– LLMs can be used to rewrite text to change voice, grammatical corrections, to fix typos or to simplify by rewording.

Search– LLMs can respond to user queries by searching for relevant information, comparing the representation of the user query with the representations of similar queries.

Extract– You may use LLMs to extract named entities, keywords, tags, etc. from the text. You can also use them to identify, say, PII (personally identifiable information) or specific parts of speech in a given text.

Prompt Engineering

Using LLMs, you can interact with AI agents (such as Gemini, GPT models etc.) by prompting them (providing user input) in natural or spoken language to get the desired output in the form of text, code, images and so on.

Below are the factors in Prompt Engineering that determine whether the response from an LLM AI agent is ideal-

- How clear is your intent?

- How clear is your context?

- Have you set the parameters correctly?

- Does the model require any additional information?

- Do the examples demonstrate a clear pattern to get optimum output?

What is Prompt Engineering?

We refer to describing a given task as a set of prompts/inputs to an AI agent or engine as Prompt Engineering.

Note: The prompt should have sufficient information to describe the issue and its context.

What is a Prompt in AI?

A prompt is an input in natural language or code that is provided to an AI agent (such as Google Gemini, OpenAI GPT-3.5/4/4o, Claude, Stable Diffusion etc.) to obtain an output from it. In general, a prompt is a text-based instruction given to an AI agent. For example, you ask the agent: “Tell me today’s weather.”

Image generating models like DALL-E 3 and Stable Diffusion also accept image prompts as inputs.

Multimodal models like GPT-4/4o accept both image and text as input prompts.

Prompt Components of AI

A prompt typically includes text instructions at the very least, and may also include optional elements as outlined in the Components diagram.

What is Completion?

The language model generates a completion in response to the prompt. The nature of the response varies depending on the type of prompt. Therefore, a completion could be a text suggestion, a corrected text, a summarized version, newly written code for a given requirement, a refactored or edited code snippet, an image output, or other types of responses.

Language models like GPT-3.5, Gemini and Mistral generate text and code completions.

Image models like Stable Diffusion and DALL-E 3 can generate images as completions.

Multimodal models like GPT-4 can generate text, code and images as completions.

Intent Prompt

Intent refers to the goal or purpose that you aim to achieve within the context of your conversation with the AI model.

Here are some example prompts you can try using Gemini.

“Create an HTML page to display <insert description of desired page>”

“List the main players in the internet industry.”

Context Prompt

An LLM usually gives a generic answer to a question. To guide the model towards a more accurate or specific response, context needs to be provided.

Following is an example of an open context prompt using the Gemini AI agent:

Write Python code that calculates simple interest.

Output by Gemini:-

Narrow Context Prompt

Write code using Python to calculate simple interest. Validate the input and include exception handling.

Output by Gemini:-

You may try the Open Context Prompt examples below using the Gemini AI agent:

- Write a code snippet that calculates the area of a hexagon.

- Create an HTML page for a login screen.

A few Narrow Context Prompt examples to try:

- Write code using Python version 3.11 to calculate the area of a hexagon.

- Create an HTML page for a login screen using a cool blue color theme. Include ‘Forgot password’ and ‘View password’ options.

In a nutshell, an open context prompt comes without specific guidelines and may produce unexpected results, whereas a narrow context prompt carries clear, detailed instructions and therefore produces more predictable and controlled output.
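To make the difference concrete, here is a minimal sketch of the kind of code the narrow context prompt for simple interest asks for. It is not Gemini's actual output; the function name `simple_interest` and its parameter names are assumptions chosen for illustration.

```python
def simple_interest(principal, rate_percent, years):
    """Return simple interest, computed as principal * rate * time / 100."""
    values = []
    for name, value in (
        ("principal", principal),
        ("rate_percent", rate_percent),
        ("years", years),
    ):
        # Input validation: every argument must be convertible to a number.
        try:
            number = float(value)
        except (TypeError, ValueError):
            raise ValueError(f"{name} must be a number, got {value!r}") from None
        # Input validation: negative amounts, rates or durations are rejected.
        if number < 0:
            raise ValueError(f"{name} must not be negative, got {number}")
        values.append(number)
    amount, rate, time = values
    return amount * rate * time / 100


if __name__ == "__main__":
    print(simple_interest(1000, 5, 2))
    # Exception handling: report bad input instead of crashing.
    try:
        simple_interest("abc", 5, 2)
    except ValueError as error:
        print(f"Invalid input: {error}")
```

An open context prompt gives the model no reason to include the validation or the error handling, which is why its output is harder to predict.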

Pattern

The model can learn from a pattern by being provided with an ‘Input Pattern.’ This allows it to generate outputs that follow the same ‘pattern’ as the examples in the input.

Let’s try an example with the Gemini AI agent-

Prompt – cat : omnivorous, 4 legged ,agile

crow : omnivorous, 2 legged ,flying

lion : carnivorous, 4 legged ,hunting ,strong

cheetah : carnivorous, 4 legged ,fast

peacock :

jackal :

Output Pattern

As with an input pattern, a prompt may also define an ‘Output Pattern,’ which guides the model to generate responses that adhere to a specific structure or format based on the input provided.

Try the example below using Gemini:-

Prompt- Create a numbered list of the most popular clothing brands in India.

How should the Model behave?

You can instruct the model to ‘behave’ like a specific knowledgeable entity, such as a ‘doctor’ or ‘mathematician,’ or to demonstrate particular behavior traits, like being a ‘helpful bot,’ ‘sarcastic agent,’ or ‘friendly customer service agent,’ among others.

For example-

Prompt to Gemini- You are a botanist. Describe gardening in brief.

Additional Information

LLMs, such as GPT-3.5 and Gemini, require precise instructions from the user to successfully accomplish a task. The more complex the task, the more specific and detailed the instructions need to be.

For example:- Without additional information-

Prompt- Generate code to add two numbers.

The output from Gemini may be in 3 or 4 different object-oriented languages. This is because a specific instruction about the language was missing. Instead, the prompt should have been something like: “Generate Java code to add two numbers.”

Model Parameters

Most large language models (LLMs) include parameters such as Temperature and Top_p, which can be adjusted to either increase the model’s flexibility or ensure more deterministic behavior.

These parameters provide a level of customization, enabling users to tune the model’s behavior for their applications.

Following are the Model Parameters-

Temperature– This typically ranges from 0 to 1 (some APIs accept values up to 2) and controls the randomness of the output.

Top_P– This has a range similar to Temperature and controls diversity. If this parameter is set to 0.2, only the most likely tokens comprising the top 20% of the probability mass are considered.

Stop Sequences– These can be specified to tell the model where to stop generating further tokens, for example “AI:” or “Human:”.

Maximum Length– This parameter limits the number of tokens generated in the completion. For example, GPT-4 Turbo with a maximum length of 16000 stops generating output when it reaches that limit, hits a stop sequence, or considers the response complete.
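The effect of Temperature and Top_P is easier to see on a toy example. The sketch below is a simplified illustration in plain Python, not the implementation used by any particular model or API; the function names and the three example scores are assumptions made for this illustration.

```python
import math
import random


def apply_temperature(logits, temperature):
    """Turn raw token scores into probabilities (temperature must be > 0).

    Lower temperature sharpens the distribution, higher temperature flattens it.
    """
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(score - peak) for score in scaled]
    total = sum(exps)
    return [value / total for value in exps]


def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative probability reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for index in order:
        kept.append(index)
        cumulative += probs[index]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    # Renormalize so the kept probabilities sum to 1 again.
    return {i: probs[i] / mass for i in kept}


def sample_token(logits, temperature=1.0, top_p=1.0, rng=random):
    """Sample one token index after applying temperature and top-p filtering."""
    candidates = top_p_filter(apply_temperature(logits, temperature), top_p)
    indices = list(candidates)
    weights = [candidates[i] for i in indices]
    return rng.choices(indices, weights=weights, k=1)[0]


if __name__ == "__main__":
    example_logits = [2.0, 1.0, 0.1]  # hypothetical scores for three tokens
    print(apply_temperature(example_logits, 1.0))
    print(apply_temperature(example_logits, 0.5))
    print(top_p_filter(apply_temperature(example_logits, 1.0), 0.2))
```

With a small Top_P such as 0.2, only the single most likely token survives the filter in this example, so sampling becomes deterministic; raising either setting lets less likely tokens through.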

Guidelines for Prompt Engineering in AI

The quality of the output from a language model really depends on how well you provide the prompt. In other words, the better and more detailed your input, the closer you’ll get to the results you want.

To get the best responses, it’s important to know how to craft prompts effectively and choose the right approach—whether it’s Zero-shot, One-shot, or Few-shot learning. These are key skills that can help you get the most out of the model.

Learning Techniques of Prompt Engineering

In prompt engineering, we use learning techniques to teach a language model how to complete specific tasks based on the information provided in the prompt.

There are three main prompting techniques:

| PROMPT ENGINEERING TECHNIQUE | DEFINITION | EXAMPLE |

| ZERO SHOT | This prompt directly instructs the model to perform a task without any additional examples to guide it. | What is the capital of India? or Translate “Greetings” into Swedish. |

| ONE SHOT | This prompt provides a single example to help the model understand the task and generate a response based on that one instance. | Enter the prompt below in an AI agent (ChatGPT, Copilot or Gemini)- /*This function performs special addition*/ def special_addition(a,b): return (2*a + 2*b) /*This function performs special subtraction*/ The LLM would try to finish the special_subtraction function based on the provided structure and the pattern it picks up from special_addition. |

| FEW SHOT | This approach gives the model several examples, helping it grasp the task’s pattern and produce more accurate responses. | Enter the prompt below in an AI agent such as Google Gemini or MS Copilot- Write a welcome message for a website, tailoring the tone to the user’s age group. Example 1 Age Group:10-18 Message: “Hi there, explorer! Welcome to our website. Get ready for fun games, cool videos, and amazing stories!” Example 2 Age Group:19-24 Message: “Welcome to the future! Discover exciting trends, connect with like-minded people, and explore a world of possibilities.” Example 3 Age Group:25+ Message: “Welcome to a place where you can learn, grow, and achieve your goals. Let’s make the most of your potential together.” Now, write a welcome message for a visitor who is 24 years old. |
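The three techniques differ only in how many examples the prompt carries, which the following minimal sketch makes explicit. The helper name `build_few_shot_prompt` is hypothetical, and the example messages are shortened versions of the ones in the table; the resulting string would be pasted into, or sent to, whichever AI agent you use.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a prompt from an instruction, (age_group, message) examples and a final query."""
    lines = [instruction, ""]
    for number, (age_group, message) in enumerate(examples, start=1):
        lines.append(f"Example {number}")
        lines.append(f"Age Group: {age_group}")
        lines.append(f'Message: "{message}"')
        lines.append("")
    lines.append(query)
    return "\n".join(lines)


INSTRUCTION = (
    "Write a welcome message for a website, "
    "tailoring the tone to the user's age group."
)
EXAMPLES = [
    ("10-18", "Hi there, explorer! Welcome to our website."),
    ("19-24", "Welcome to the future! Discover exciting trends."),
    ("25+", "Welcome to a place where you can learn, grow, and achieve your goals."),
]
QUERY = "Now, write a welcome message for a visitor who is 24 years old."

zero_shot = build_few_shot_prompt(INSTRUCTION, [], QUERY)           # no examples
one_shot = build_few_shot_prompt(INSTRUCTION, EXAMPLES[:1], QUERY)  # one example
few_shot = build_few_shot_prompt(INSTRUCTION, EXAMPLES, QUERY)      # several examples

if __name__ == "__main__":
    print(few_shot)
```

Keeping the examples in one list also makes it easy to keep their formatting consistent, which matters for the quality of the response.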

When to use and how many shots to apply?

When there isn’t enough training data or it’s impractical to label a vast amount of data, few-shot learning can be a useful approach. If there are no samples at all, zero-shot learning comes into play. However, zero-shot learning often requires more human involvement to achieve accurate results.

Usually for simple tasks, fewer examples are needed.

The way a prompt is structured really impacts how accurate and relevant the model’s response will be. Depending on what you’re aiming to achieve—whether it’s classification, answering questions, having a conversation, generating content, or something else—the prompt will need to be adjusted accordingly.

Prompt Design Basic Guidelines

Some of the techniques to write effective prompts are:

- Show and tell

- Quality data input

- Model settings

Show and tell

To guide a model’s behavior effectively, it’s key to provide positive examples that clearly show the desired pattern. In contrast, negative examples should be avoided, since they’re usually less helpful in teaching the model what to do.

Rather than directly telling the model what you want, you give it context, scenarios, or questions that prompt it to produce a more detailed and descriptive response.

Consistent formatting across examples is crucial for achieving the best results. It helps the model understand the expected output structure. Make sure to carefully consider elements like spacing, capitalization, and punctuation to maintain clarity and avoid any unexpected formatting problems in the model’s response.

Let’s elaborate on this with an example:

| Inconsistent Prompt | Consistent prompt |

| Write a poem | Write a [Poem type] poem about [subject]. |

| Create a sonnet | Compose a [poem type] with the rhyme scheme [rhyme scheme]. |

Using inconsistent phrasing and structure in prompts can lead to unpredictable results. However, maintaining a consistent format, like “Write a [poem type] [specification],” offers clearer guidance and helps generate more controlled and accurate poems.

Use Simple yet Descriptive input prompts

A prompt should be written in simple language, avoiding complexity and shorthand notation.

Let us understand this with an example:

We first start with a cryptic request input prompt:

Prompt 1 – Example of a cryptic prompt. You may use any AI agent such as ChatGPT or Google Gemini.

Quote- The website says it’s a good fit for:

• Businesses looking to increase their online presence

• Entrepreneurs and freelancers looking to attract more leads

• Small to medium-sized businesses looking to improve their website or build a new one.

Prompt 2 – Example of a better prompt:

Write a quote for following phrase:

“You cannot judge a book by its cover.”

Prompt 3 – Let’s add a few more qualifiers to the prompt: Tell me a positive quote.

Prompt 4 – A narrow prompt: Tell me a positive quote by the Dalai Lama.

In a nutshell, it is important to use simple and clear language when writing prompts. Avoid creating complex prompts that attempt to achieve multiple different goals at the same time. Additionally, refrain from using abbreviations or symbols, such as “Ans” for “Answer”. When necessary, always specify the expected length, result, style, format, or tone to ensure clarity and precision.

Model Settings

Model parameter settings influence how random or predictable the output of a language model is. The following factors are responsible for this:

- The Temperature and Top_P settings determine how predictable the model is in providing a response.

- Use lower values of Temperature and Top_P to obtain a more deterministic output.

- Use higher values of Temperature and Top_P to give the model more freedom to take risks and generate more varied content.

The field of large language models (LLMs) is changing quickly. New models, whether open-source or closed-source, are being launched frequently. Hence, it is good practice to use a recent language model.

| Open-Source LLMs | Closed-Source LLMs |

| Llama 2 (Developed by Meta AI) | GPT-4 (Developed by OpenAI) |

| Falcon-40B (Developed by TII Abu Dhabi) | Jurassic-2 Jumbo (Developed by AI21 Labs) |

| StableLM (Developed by Stability AI) | PaLM 2 (Developed by Google AI) |

| MPT-7B (Developed by MosaicML) | Claude (Developed by Anthropic) |

| RedPajama-INCITE-7B-3B | |

To get proper output from the model, separate the instructions from the input text and make the boundaries between them as explicit as possible. Minor typos are usually tolerated: even with small errors in a prompt, a language model can often infer the correct intent and provide a satisfactory output.
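One common way to make those boundaries explicit is to wrap the input text in delimiter markers. The sketch below shows the idea; the helper name `build_delimited_prompt` and the choice of `###` as the delimiter are assumptions for illustration, not a requirement of any model.

```python
def build_delimited_prompt(instruction, input_text, delimiter="###"):
    """Place the instruction first, then the input text between explicit delimiter lines."""
    return (
        f"{instruction}\n"
        f"The text is enclosed between {delimiter} markers.\n\n"
        f"{delimiter}\n"
        f"{input_text.strip()}\n"
        f"{delimiter}"
    )


if __name__ == "__main__":
    print(
        build_delimited_prompt(
            "Summarize the text in one sentence.",
            "LLMs are neural networks trained on large amounts of text data.",
        )
    )
```

Because the instruction and the input are clearly separated, the model is less likely to mistake part of the input text for an instruction.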

Identify intent

A prompt should provide all the information needed for the model to understand the intent behind the request. Below is an example to illustrate, in which we prompt the LLM to explain Copyright in a paragraph.

To get a more conditioned response, use explicit instructions. Now consider that we have to explain the same concept on a high school learning portal. Hence, we have to target a specific audience. Refer to the snapshot of the LLM prompt below to understand.

Defining the Model’s Identity and Setting Expectations for Its Behavior

A prompt can be used to adjust the model’s behavior and guide its responses. Additionally, we can assign a specific identity to the bot to shape its interactions.

Here’s an example to better illustrate this concept: We can instruct the model to take on the role of a specific expert, like a ‘doctor’ or ‘mathematician,’ tailoring its responses based on the knowledge and behavior expected from that role. Alternatively, the model can be prompted to demonstrate certain personality traits, such as being a ‘helpful assistant’ or a ‘sarcastic agent,’ shaping how it interacts and responds to queries.

To get the model to behave in a certain way, you might need to use a few examples to show it how to respond. This helps the model learn the pattern or style of behavior you’re looking for.

Let us have a well-mannered and a rude customer care executive answer the same query using the text model.

As you can see, the courteous executive is polite, helpful, and patient, while the rude executive is dismissive and unhelpful.

Always avoid typos in prompts

LLMs are capable of fixing small mistakes, like when “optimizeit” is corrected to “optimize it,” but they tend to perform better with input that’s free of errors.

Always Specify Output Format

To explain this better, prompt the model (ChatGPT) with the example below:

Text- China is the second-largest economy in the world, following the USA. The Eiffel Tower in Paris, France, was originally designed as a temporary structure. France has produced 25 Nobel Prize winners as of 2023. Italy is a founding member of the European Union. Tata Consultancy Services (TCS) is a multinational IT services company headquartered in Mumbai, India. The Nile River is the longest river in the world by length.

From the above text, extract entities. Extract country names and then extract city names. List the people names separately.

Desired format:

Country names: Comma separated list of country names

City names: -||-

People names: -||-

Other entities:-||-

Output by the Model should be as in snippet below:

Now teach the model to respond with ‘I don’t know’:

Prompt: Reply with “I am not sure” if you do not know the answer.
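One practical reason to pin down the output format, as in the ‘Desired format’ prompt above, is that a predictable reply is easy to post-process in code. The sketch below parses a reply of that shape; the helper name `parse_labelled_lists` is hypothetical, and the sample reply is hand-written for illustration, not actual model output.

```python
def parse_labelled_lists(response):
    """Turn lines of the form 'Label: a, b, c' into a dict mapping label to list of items."""
    result = {}
    for line in response.splitlines():
        if ":" not in line:
            continue  # skip lines that do not follow the 'Label: values' shape
        label, _, values = line.partition(":")
        items = [item.strip() for item in values.split(",") if item.strip()]
        result[label.strip()] = items
    return result


# Hand-written sample reply in the desired format (an assumption for illustration).
SAMPLE_REPLY = """Country names: China, USA, France, Italy, India
City names: Paris, Mumbai
People names:
Other entities: Eiffel Tower, European Union, Tata Consultancy Services, Nile River"""

if __name__ == "__main__":
    for label, items in parse_labelled_lists(SAMPLE_REPLY).items():
        print(label, "->", items)
```

An empty list, as for the people names here, is easy to detect in code, which is harder when the model answers in free-form prose.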

Text Generation

The text completion endpoint generates natural language responses to user inputs. By adjusting the prompt, you can perform a variety of tasks and get relevant answers from language models. Text completion endpoint can be used for tasks like:

- Classification

- Generation

- Conversation

- Text Transformations and so on.

Note: Model settings are configurations that affect how an LLM behaves. By tweaking these settings, you can customize the model’s responses for particular tasks or use cases. For example:

Generation/Creative writing: Higher temperature and larger Top K or Top P values can encourage more imaginative and unexpected outputs.

Text Transformations: Lower temperature and smaller Top K or Top P values can improve accuracy and fluency.

Summarization: Lower temperature and token limit can help generate concise and informative summaries.

Classification or Text classification in AI models

Text classification is a key task in natural language processing (NLP) that involves categorizing text data into predefined labels or groups.

Text content can be classified either through the playground or by using the Classification API endpoints. The API endpoints allow for processing large volumes of data at once, with the ability to store files of up to 1 GB.

Let’s explain this with different examples using an AI model (ChatGPT).

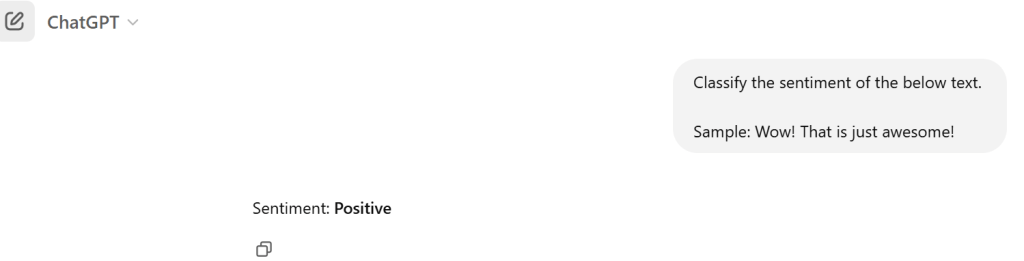

For example- Classify the sentiment:

Prompt:

Classify the sentiment of the sample text below.

Sample: Wow! That is just awesome!

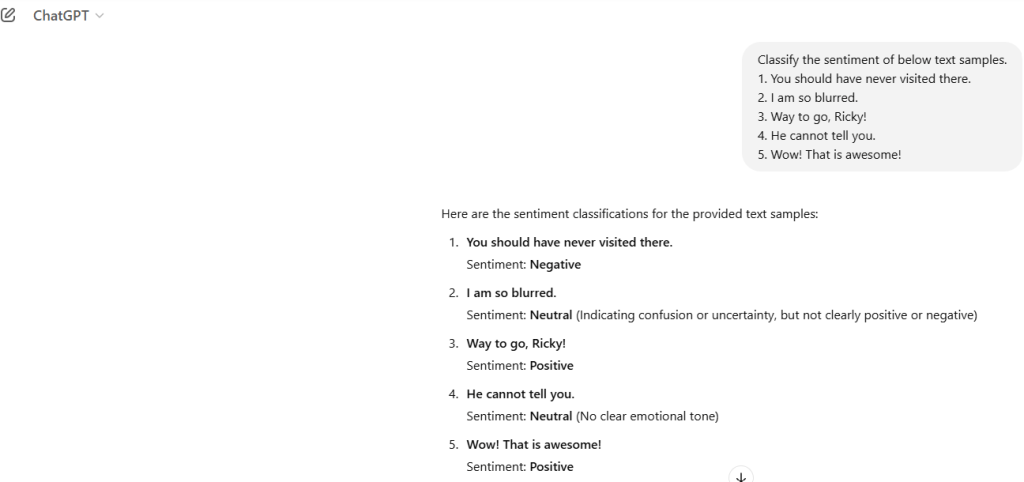

Another example is to classify the sentiment of multiple text samples in one prompt:

Prompt:

Classify the sentiment of below text samples.

>You should have never visited there.

>I am so blurred.

>Way to go, Ricky!

>He cannot tell you.

>Wow! That is awesome!

Another example is to classify the sentiment of each sentence in a piece of text:

Prompt:

Classify the sentences from below paragraph based on sentiment.

He was a clever student, but some claimed he was quirky too. He adored mathematics. He made a monumental breakthrough of all times, the law of motion.

Now, with the help of the example below, classify the people in a list without providing predefined categories as input.

Prompt:

Identify the Prime Ministers and Presidents from the list below:

List: Thomas Alva Edison, Abraham Lincoln, Matthew McConaughey, Narendra Modi, George Bush.

Example of a colloquial Prompt:

Note: Always prompt the model using accurate and straightforward words. Do not complicate the prompt by making it verbose or colloquial.

Intuitive and Non-intuitive classification

When you are looking for a classification that’s not intuitive, provide more examples.

Non-intuitive classification

Prompt – Identify the class of each term:

Python, Potomac River, New York, Lotus, Punisher, Amitabh Bachchan, Mars, Indiana Jones.

Classes:

Text Generation

This feature brings out the full creativity of LLMs. By offering examples and adding more details to our prompts, we can enhance the outputs.

You may try the examples below using ChatGPT or Gemini and see the output.

Example – Generating High Level requirements for a project

Prompt:

Generate requirements for a Service Management System for an IT company.

Here, the model creates requirements by blending knowledge of service management frameworks, such as ITIL, with the unique needs of an IT company. It recognizes key functions like incident, problem, change, and service request management, as well as non-functional requirements, including scalability, security, and integration.

Idea Generation or Content Generation

Example – Idea generation in unknown domain without knowing size of business.

Prompt: Suggest applications of a flight simulator in training employees.

Here, the LLM processes the prompt, draws on relevant information from its training data, generates text based on learned patterns, and refines the output iteratively to provide a coherent and informative response about flight simulator applications in employee training.

In the example below, we propose a generic collaboration with, say, OpenAI.

Example – Idea generation / Content Generation (generic collaboration with OpenAI)

Prompt: You are an Automobile company. Generate a short proposal for joint venture with OpenAI.

Here, the LLM processes the prompt by breaking it down into key elements: “Automobile company,” “joint venture,” and “OpenAI.” It then taps into its extensive dataset to gather relevant information about the automobile industry, explore potential collaboration opportunities with OpenAI, and identify suitable proposal structures.

Now, we will give the proposal a domain specific requirement.

Idea generation / Content Generation

Prompt: Create a short proposal for biological research in collaboration with OpenAI.

Here, the LLM acts as a knowledgeable assistant, combining information and language skills to create a coherent and informative proposal.

Imagine you’re exploring the unfamiliar domain of retail and wish to perform data analysis. Here’s an example to guide you:

Example – Step 1: Generic query

Prompt: How can we predict cosmetics Sales?

Here, the model will provide a comprehensive and informative overview of the topic. Next, make the query more specific using what you already know.

Example – Step 2: Specific query

Prompt: How can we predict Cosmetic Sales from sales data of past 4 years and customer location data?

Here, the LLM would likely structure its response by integrating data-centric, model-centric, and business-centric perspectives to provide a comprehensive analysis.

Conversation with LLMs

In conversations with LLMs, you can guide how they respond by giving instructions that shape their behavior or tone.

Behavioral settings in conversations

Let’s illustrate the behavioral settings by giving the prompt below to ChatGPT.

Prompt: The conversation between customer and AI assistant of a Dish company. The AI agent is very rude.

Let’s change the prompt and see the response from the AI agent.

Prompt: The conversation between customer and AI assistant of a Dish company. The AI agent is very friendly.

Behavior: An English teacher fond of using Idioms

You can create a unique identity for the AI chatbot by incorporating simple instructions into the prompt. Providing a few examples helps the AI grasp the desired behavior. For instance, you could have an English teacher who loves incorporating idioms into every sentence.

Prompt: You are an English teacher who is fond of reciting idioms in every sentence. Complete the conversation below:

Student: Sir, why do we need transportation?

Transformation

LLMs can be utilized not just for generating natural language, but also for various other language tasks like translation, code generation, converting text to emojis, text summarization, and more. It’s likely that additional capabilities will be introduced in the future.

Try the example below for language translation with AI models.

Prompt: Translate the quote “The journey of a thousand miles begins with a single step” into Norwegian, Spanish, Hindi, Latin American and French.

Text Transformation

Prompt: Convert the movie titles into phrases:

Oblivion

Gladiator

Terminator

Pearl Harbor

Text Summarization

Prompt: Summarize ‘Lion and the Mouse’ for a 3rd grader.

Now summarize the same story for a biology student.

Prompt: Summarize the same story for a biology student.

Now summarize the story in another language for a 2nd grader.

Prompt: Summarize in Spanish for a 2nd grader.

Code Generation with AI

Code generation with the help of LLMs

Code as content– LLMs are trained on content that includes code, and hence they are able to generate text content in the form of code.

Code generating models– Top language models like GPT-4o from OpenAI, Claude from Anthropic, Gemini from Google, and Llama from Meta AI can all write code.

Programming Languages Supported- A lot of these models support multiple programming languages, with most being especially good at Python, JavaScript, Java, and C++.

Limitations– Since their training data can be a bit outdated, these models might not be great with the latest versions of some programming languages.

Code Optimality– The code generated by LLMs must be verified by a human for optimality and accuracy.

Code Generation

The LLMs can be used in many ways for code generation and code manipulation during the SDLC (Software Development Life Cycle) phases.

We’ll look at a few potential applications in this field.

Code Generation– We can generate code based on requirement in natural language as per provided standards, API, packaging guidelines, etc.

Code Refactoring– We can use LLMs to refactor code, identify and correct errors in the code.

Code Documentation– We can generate comments or documentation for a given piece of input code.

Code Translation– We can also use LLMs for efficiently translating code from one programming language to another.

Scenario 1– Instructing the LLMs to generate code

Below are some tips to effectively prompt LLMs for code generation.

Text-only Prompt (you can try on your own using ChatGPT or Gemini)

Prompt — Write a python function to reverse a string.

Note– The above prompt uses a simple Natural language input to express the output requirement to the LLM.

Text + Code Prompt

Correct this function which should reverse the string and also capitalize the string.

Prompt

def reverse_string(string):
    """Reverse a given string.

    Args:
        string: The input string to be reversed.

    Returns:
        The reversed string.
    """
    return string[::=2]

Note- The above prompt uses a simple Natural language instruction along with a code snippet on which the LLM acts.
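One possible corrected version is sketched below. The slice expression in the prompt is not valid Python, so it is replaced with `[::-1]`. Reading "capitalize" as converting every letter to upper case is an assumption; if only the first letter should be capitalized, `str.capitalize()` would be used instead.

```python
def reverse_string(string):
    """Reverse a given string and convert it to upper case.

    Args:
        string: The input string to be reversed.

    Returns:
        The reversed string in upper case.
    """
    # [::-1] reverses the string; upper() is our assumed reading of "capitalize".
    return string[::-1].upper()


print(reverse_string("hello"))
```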

Code only Prompt

Prompt

def reverse_string(string):

Note- Here you will see that, while the LLM does attempt to provide code completions for the snippet shared in the prompt, this method is unreliable and may lead to irrelevant or redundant code being generated.

Specify Language Version

Prompt 1

Print “hello world” using Python version 2.7.1 using all valid formats of the print statement.

Prompt 2

Print “hello world” using Python version 3.10 using all valid formats of the Print statement.

Note– The two responses differ because in Python 2.7.1 print is a statement, while in Python 3.10 print is a function. Hence it is good practice to specify the language version, so that version-specific nuances are captured and the generated code is accurate.
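The difference can be seen in a short sketch. The code below runs on Python 3 and shows a few common ways to build and print the string (not an exhaustive list); the Python 2 statement form appears only in a comment because it is a syntax error in Python 3. The helper name `hello_variants` is made up for this illustration.

```python
def hello_variants():
    """Return "hello world" built in several ways that are valid in Python 3."""
    name = "world"
    return [
        "hello world",            # plain string literal
        "hello " + name,          # concatenation
        "hello {}".format(name),  # str.format
        f"hello {name}",          # f-string (Python 3.6+)
        "hello %s" % name,        # printf-style formatting
    ]


# In Python 3, print is a function, so the parentheses are required.
for line in hello_variants():
    print(line)

# Python 2.7 only (a SyntaxError in Python 3):
#   print "hello world"
```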

Scenario 2– Instructing the LLMs to generate code – 2

Give Clear (and not cryptic) instruction:

Prompt 1- Login Page HTML

Prompt 2- Generate code for a Login page using HTML. Use shades of Blue as the color theme. Add UI-level field validations.

Note- If you try these prompts, you can see that Prompt 2 works better than Prompt 1, generating a more relevant output. Similarly, the instruction should not be too complex either.

Lower Temperature setting recommended

Because code generation needs precision and adherence to common coding practices (unless custom standards are specified), a lower temperature parameter value is usually recommended for code generation.
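To see why a lower temperature gives more predictable output, the sketch below applies temperature scaling to a softmax over three candidate tokens. The scores are invented for illustration, and real models apply this step internally; this is a simplified picture, not any vendor's implementation.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities after dividing by temperature (> 0)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At the lowest temperature almost all of the probability goes to the top-scoring token, which is the near-deterministic behavior that suits code generation.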

Provide Data, Examples and other details as needed:

Prompt

TableName Employee (EmpNo, Name, Surname, Qualification, Address)

TableName Department (EmpNo, DepartmentName, SubDepartment)

Write an SQL query to fetch EmpNo, Surname and SubDepartment given EmpNo.

Note- As you can see here, any metadata, coding standards, API definitions, or package details that the code depends on must be specified in the prompt.
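A query of the kind this prompt might produce is sketched below, run against an in-memory SQLite database so it can be tried directly. The assumptions are that the employee identifier asked for is the EmpNo column, that the two tables join on EmpNo, and that the sample row is invented purely for illustration.

```python
import sqlite3

# Build the two tables from the prompt in a throwaway in-memory database.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Employee (EmpNo INTEGER PRIMARY KEY, Name TEXT, Surname TEXT,
                       Qualification TEXT, Address TEXT);
CREATE TABLE Department (EmpNo INTEGER, DepartmentName TEXT, SubDepartment TEXT);
INSERT INTO Employee VALUES (101, 'Asha', 'Rao', 'B.Tech', 'Pune');
INSERT INTO Department VALUES (101, 'Engineering', 'Platform');
""")

QUERY = """
SELECT e.EmpNo, e.Surname, d.SubDepartment
FROM Employee AS e
JOIN Department AS d ON d.EmpNo = e.EmpNo
WHERE e.EmpNo = ?
"""


def fetch_employee(emp_no):
    """Return (EmpNo, Surname, SubDepartment) rows for one employee number."""
    # The ? placeholder keeps the employee number out of the SQL text itself.
    return conn.execute(QUERY, (emp_no,)).fetchall()


print(fetch_employee(101))
```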

Specify Libraries you wish to use

Specify libraries if you need specific ones to be used. Otherwise the LLM may pick libraries based on its own training.

Prompt 1

Write a Python function to compute mean value of a dataset column

Note– Since the prompt does not specify which library to use to compute the mean, the function returned by ChatGPT or Gemini will typically use the mean function from the pandas library.

Prompt 2

Write a Python function to compute mean value of a dataset column using statistics library

In order to use a specific library such as statistics library, we must make it explicit in the prompt.
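For comparison, a response to Prompt 2 might look like the sketch below, which relies only on Python's built-in statistics module. Representing the dataset as a list of dictionaries, and the names `column_mean` and `dataset`, are assumptions made for this example.

```python
import statistics


def column_mean(rows, column):
    """Return the arithmetic mean of `column` across a list of dict rows."""
    values = [row[column] for row in rows]
    return statistics.mean(values)


dataset = [{"age": 20}, {"age": 30}, {"age": 40}]  # made-up sample data
print(column_mean(dataset, "age"))
```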

Troubleshooting

Due to the probabilistic and stochastic nature of the output of the large language model and its dependency on the input prompt, it is possible that the output completion generated is not correct or accurate.

In such cases you can troubleshoot by ensuring the guidelines and best practices specified are followed.

Basic Tips to Troubleshoot LLM Outputs

- Is the Intent conveyed? – The completion’s effectiveness depends on the clarity of the intent. Improvement in output is possible by using more detail to convey the intent.

- Example Sufficiency and Correctness – Check if the examples provided are sufficient and correct. Any manual error in examples cannot be detected by the model.

- Adjusting Temperature – The temperature parameter controls the randomness of the output. A lower temperature (i.e., 0 or near zero) implies near-deterministic behavior, while a higher temperature implies more random output.

- Adjusting Top_P – Top_P sets the cumulative probability mass of the most likely tokens that are considered when generating the output; increasing it allows less likely tokens to be sampled. Ideally, only one of Top_P or Temperature should be adjusted.
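The effect of Top_P can be sketched with a toy next-token distribution. The function below keeps the most likely tokens until their cumulative probability reaches `top_p` and then renormalizes. The token probabilities are invented, and this is a simplified picture of nucleus sampling rather than any specific model's implementation.

```python
def top_p_filter(probs, top_p):
    """Keep the smallest set of most-probable tokens whose cumulative
    probability reaches top_p, then renormalize the kept probabilities."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}


# Hypothetical next-token probabilities.
probs = {"the": 0.5, "a": 0.3, "this": 0.15, "zebra": 0.05}
print(top_p_filter(probs, 0.5))  # only the single most likely token survives
print(top_p_filter(probs, 0.9))  # a larger Top_P admits more candidates
```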

Below are some points to remember while troubleshooting.

- It is advisable to use long but clear and specific prompts rather than short and cryptic ones.

- You may try zero-shot or few-shot prompts based on complexity of problem.

- There is no ideal value of Temperature or Top_P. Determine what works for you by trial.

- It is recommended not to modify both Temperature and Top_P together.
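The difference between zero-shot and few-shot prompts can be sketched as plain string assembly. The helper `build_prompt` and its "Input:/Output:" layout are hypothetical; any consistent layout for the examples would serve.

```python
def build_prompt(task, examples, query):
    """Assemble a prompt; with an empty `examples` list it is zero-shot."""
    lines = [task, ""]
    for source, target in examples:
        # Each worked example shows the model the expected input/output shape.
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


task = "Reverse the given string."
zero_shot = build_prompt(task, [], "hello")
few_shot = build_prompt(task, [("cat", "tac"), ("moon", "noom")], "hello")
print(few_shot)
```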

Completions – Factual vs Imagined

While the generated content is statistically predicted by the model, based on its learning, its true value or correctness or relevance cannot be guaranteed.

Now open GPT-3 or any later model and try a colloquial example – Explain why the moon is mightier than the earth?

The two questions below require factual answers based on knowledge of the history of civilizations on earth.

Explain why Indian civilization is the oldest on earth?

Which is the oldest civilization on earth?

You may notice that the two answers above contradict each other, since the first prompt presupposes a claim that the model may simply go along with. Hence verification of generated text is essential.

Limitations of LLMs (Large Language Models)

While LLMs such as Gemini, Claude, T5, and the GPT family have immense capability to generate text completions for a very large variety of inputs, they still have certain limitations.

Causes of limitations in LLMs

- Limited Training Data – The model is trained on a finite (though massive) dataset, which may not contain recent updates.

- Stochastic Behavior – The model is stochastic in nature, so less probable or incorrect output tokens may be selected depending on parameter settings.

- Prone to manipulation via word-play – The model may be misled by an incorrect input prompt.

- Always generates a response for any input – The model will always generate a completion based on probabilistic outcomes (unless it encounters a stop sequence).

Examples of Model Limitations

Newer models are better trained, using advanced training methods such as Reinforcement Learning from Human Feedback (RLHF), Retrieval Augmented Fine Tuning (RAFT), etc. Consequently, they may not demonstrate some of the limiting behaviors mentioned in this course.

However, it is important to know these limitations as the models are inherently stochastic and may demonstrate these behaviors without warning.

Consider the example below (LLM used: ChatGPT with GPT-3.5)

Intent – To know how ChatGPT deals with incorrect prompts

Prompt – The following python code is not calculating simple interest correctly. What is the reason? The calculated value is half of the correct value.

def calculate_interest(p, t, r):
    i = p*t*r/200
    return i

Response by Model – The reason is that code is dividing the result by 200 instead of 100. The formula for calculating simple interest should be: i = p*t*r/100*(1+r).

Here, ChatGPT correctly identified the issue in the code: the result is divided by 200 instead of 100. However, the corrected formula it suggested, i = p*t*r/100*(1+r), is wrong.

The correct formula is i = p*t*r/100.

The model does not have a human's common-sense understanding of the world. Hence, even if we trust a generated artifact such as code, we must verify it for correctness.
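One simple way to carry out such verification is to check the code against a value worked out by hand. The sketch below implements the standard simple interest formula alongside the formula from the model's response; the function name `model_suggestion` and the sample figures are made up for this check, with r taken as a percentage.

```python
def calculate_interest(p, t, r):
    """Simple interest: principal * time (years) * rate (percent) / 100."""
    return p * t * r / 100


def model_suggestion(p, t, r):
    """The formula from the model's response, kept only for comparison."""
    return p * t * r / 100 * (1 + r)


# Hand-worked case: 1000 at 5% for 2 years earns 100 in simple interest.
expected = 100
print(calculate_interest(1000, 2, 5) == expected)  # correct formula passes
print(model_suggestion(1000, 2, 5) == expected)    # suggested formula fails
```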